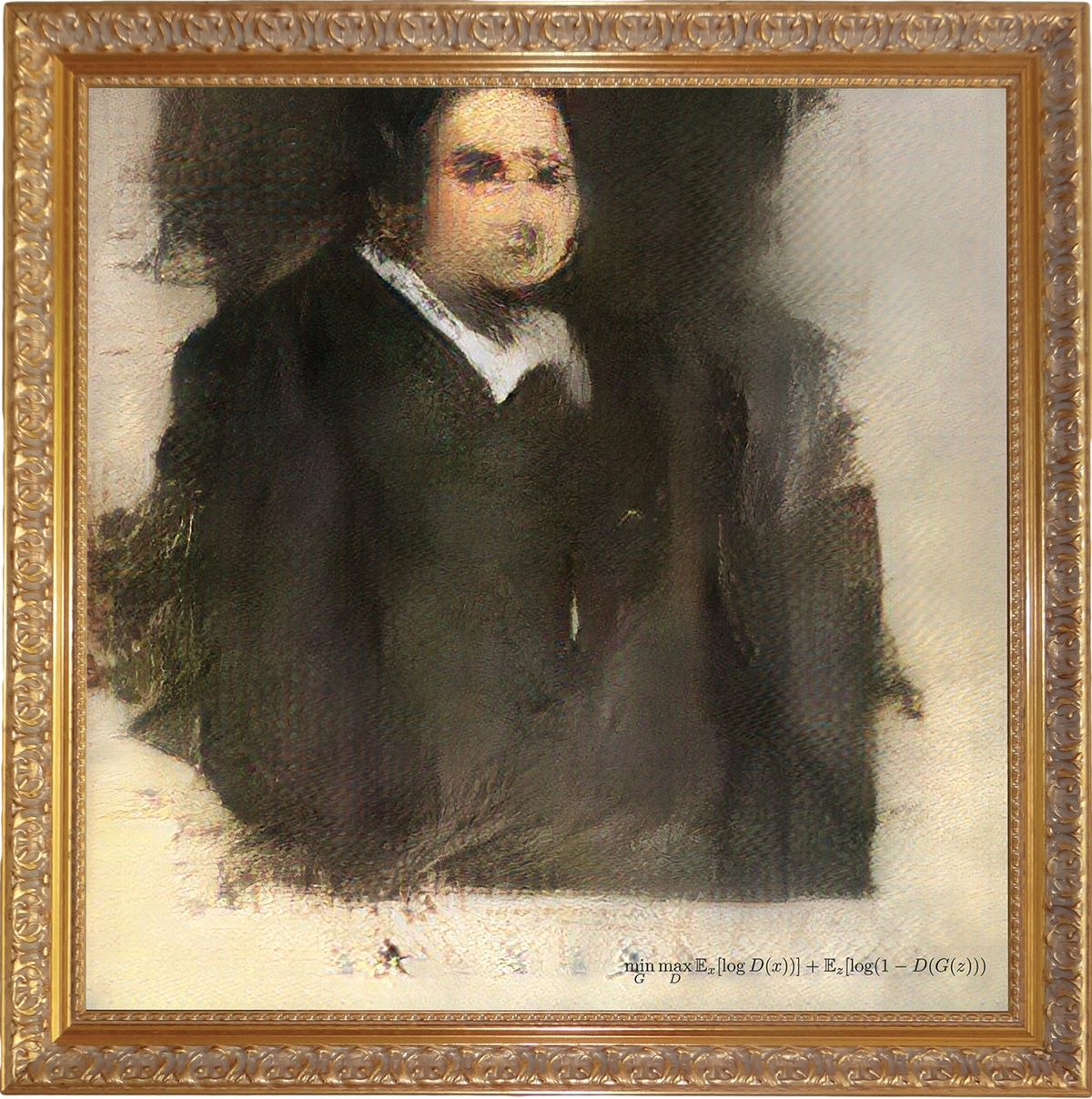

This October in New York, Christie’s will become the first auction house to sell a work created by artificial intelligence (AI). The 2018 painting, a hazy portrait of what appears to be a well-fed clergyman, possibly French, hailing from an indeterminate period in history, is expected to fetch between $7,000 and $10,000. His name, according to the work’s title, is Edmond Belamy.

Even more curious is the signature scrawled on the bottom right of the canvas, which reads: $\min_G \max_D \, \mathbb{E}_x[\log(D(x))] + \mathbb{E}_z[\log(1 - D(G(z)))]$, and refers to the algorithm, or generative adversarial network (GAN), that produced the work.
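The formula in the signature can be read as a sum of two averages: the mean of log D(x) over real images x, plus the mean of log(1 − D(G(z))) over random inputs z, where G is the generator and D is the discriminator. The following is a minimal Python sketch of that calculation, not Obvious's code. It assumes single numbers stand in for images, and the names `gan_value`, `undecided_discriminator` and `toy_generator` are hypothetical, chosen only for illustration.

```python
import math
import random

def gan_value(discriminator, generator, real_samples, noise_samples):
    """Sample-average estimate of E_x[log D(x)] + E_z[log(1 - D(G(z)))]."""
    real_term = sum(math.log(discriminator(x)) for x in real_samples) / len(real_samples)
    fake_term = sum(
        math.log(1.0 - discriminator(generator(z))) for z in noise_samples
    ) / len(noise_samples)
    return real_term + fake_term

# Hypothetical stand-ins: single numbers play the role of images.
rng = random.Random(0)
real_samples = [rng.gauss(4.0, 1.0) for _ in range(1000)]
noise_samples = [rng.gauss(0.0, 1.0) for _ in range(1000)]

def undecided_discriminator(x):
    # Always answers "50% chance this is real": it cannot tell the two apart.
    return 0.5

def toy_generator(z):
    # Shifts the noise so its output resembles the real samples.
    return z + 4.0

value = gan_value(undecided_discriminator, toy_generator, real_samples, noise_samples)
print(value)  # about -1.386, i.e. 2 * log(0.5)
```

The discriminator tries to push this number up, while the generator tries to push it down. When the discriminator can do no better than a coin flip, as in this toy case, the value settles at 2 · log(0.5).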

Signing the canvas with an algebraic formula may be a mere marketing ploy, but the sale—and the ascent of AI works in general—is raising important questions about authorship. If a work is conceived by a human but created by a machine, who owns the copyright? And what happens in a dystopian, but potentially real, scenario where there is no human interaction at all? Could an AI then enjoy copyright privileges? After all, Sophia the AI robot was given citizenship status in Saudi Arabia last year.

Who holds the keys to the code?

As with literary works, source code automatically qualifies for copyright protection under UK and EU law, so the copyright holder for something that has effectively been created by a machine would usually be the person who wrote the software. As the US artist and digital art collector Jason Bailey says: “The process of coding generative art [is] similar to painting or sketching.”

However, there is an important distinction between the source code and the algorithm that produced the Belamy portrait and ten other pictures in the fictional Belamy family collection. Pierre Fautrel, one of the three members of Obvious, the Paris-based collective behind the paintings, says they decided not to patent their code because they were advised that doing so would be too costly. According to Dehns, a law firm specialising in patents and trademarks, obtaining worldwide patents can cost as much as £10,000.

The question is whether Obvious will freely share their source code with the world at large. For the moment, the answer is no. “Once we have created our second collection, then we will openly publish the code to the Belamy family collection,” Fautrel says.

Fellow member Gauthier Vernier sees the portraits as a collaboration between the algorithm and Obvious. “In that sense, you could say the copyright should be shared, but currently there is no legal framework in which to consider the algorithm as an author,” he says.

What could AI law cover?

The Belamy pictures were produced using an algorithm composed of two parts: a generator and a discriminator. Obvious fed the system a dataset of 15,000 portraits painted between the 14th and 20th centuries, crucially all out of copyright. The generator then made new images based on the set and the discriminator reviewed each one, trying to determine whether it was created by human hand or by the generator.
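The back-and-forth between generator and discriminator can be sketched in a few lines of Python. This is a toy illustration under loud assumptions, not the code Obvious used: single numbers drawn around 4.0 stand in for the portraits, the generator and discriminator are each reduced to two parameters, and every name here (`train_toy_gan`, `sigmoid`, `a`, `b`, `w`, `c`) is hypothetical.

```python
import math
import random

def sigmoid(t):
    # Numerically stable logistic function: maps any number to a 0..1 score.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)

def train_toy_gan(steps=2000, lr=0.01, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0   # generator:     G(z) = a*z + b
    w, c = 0.0, 0.0   # discriminator: D(x) = sigmoid(w*x + c)
    for _ in range(steps):
        x = rng.gauss(4.0, 1.0)   # a "real" sample (stand-in for a portrait)
        z = rng.gauss(0.0, 1.0)   # random input fed to the generator
        g = a * z + b             # a generated sample

        # Discriminator step: raise log D(x) + log(1 - D(g)),
        # i.e. score real samples higher and generated ones lower.
        d_real = sigmoid(w * x + c)
        d_fake = sigmoid(w * g + c)
        w += lr * ((1.0 - d_real) * x - d_fake * g)
        c += lr * ((1.0 - d_real) - d_fake)

        # Generator step: lower log(1 - D(G(z))),
        # i.e. nudge its output toward what the discriminator scores as real.
        d_fake = sigmoid(w * g + c)
        a += lr * d_fake * w * z
        b += lr * d_fake * w
    return a, b, w, c

a, b, w, c = train_toy_gan()
print("generator parameters:", a, b)
print("discriminator parameters:", w, c)
```

Each pass through the loop is one round of the contest: the discriminator is adjusted to tell real from generated samples, then the generator is adjusted to make that harder. A real system does the same with images and far larger networks.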

The portraits are further protected because, as Vernier explains, the algorithm won’t produce the same result twice, even if trained on the same dataset, so each painting is unique. “The fact that we use a physical medium that we hand-sign ensures that the works cannot be replicated. So, we focused on protecting the final product, not the algorithm,” he says.

The market for AI works is still very much in its infancy, although slowly gaining momentum. As it continues to grow, the question of copyright versus open source could become a real sticking point. “If we start seeing works sell for tens of thousands or hundreds of thousands of dollars, does that shut down open-source in these communities?” Bailey says.

Similarly, as Tim Maxwell of the London law firm Boodle Hatfield points out, developments in art generated by AI are “pushing right up against the edge of what copyright laws currently cater for”. The biggest issue, Maxwell says, concerns the “human input element” of the work. “Until now, computers have been the means of producing the work rather than the creative origin—something they could conceivably become,” he says, adding that although we are not at that point yet, a work produced autonomously by a computer would prove a “very difficult question”.

For now, at least, it seems the question of autonomy is squarely in the hands of humans. But for how long?

After publication, Obvious sent the following clarification: The algorithm is the GAN, so there is no question over patenting it because it is common knowledge. We did not invent it, Ian Goodfellow did. We wrote the code, which could potentially be patentable, but very expensive and arguably useless, so we decided against it.