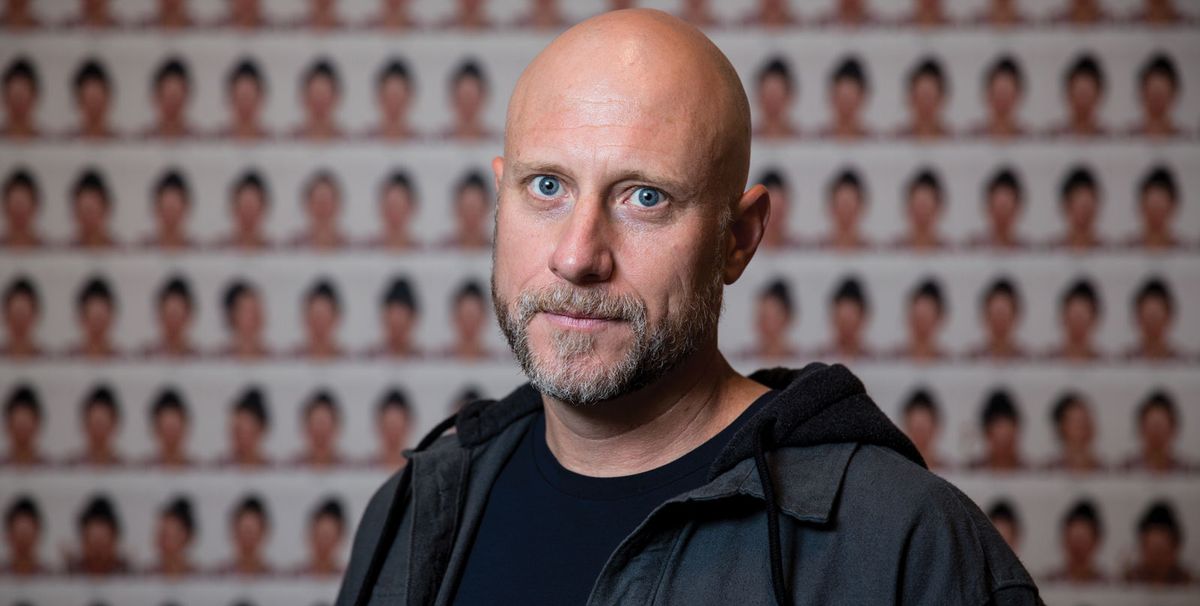

It is a busy year for Trevor Paglen. The Berlin-based US artist, who was awarded a $625,000 MacArthur genius grant last October, is not resting on his laurels. This month, Trevor Paglen: Sites Unseen (21 June–6 January 2019) opens at the Smithsonian American Art Museum in Washington, DC—his first major career retrospective and one of the biggest solo shows the museum has ever given to a contemporary artist. The exhibition includes works from throughout his career, from his atmospheric photographs of military installations, spy satellites and underwater internet cables, to his sculptures that give a physical presence to abstract or secret ideas, such as a cube made from irradiated glass from Fukushima or the talisman-like “challenge coins” circulated among the intelligence community. More recently, Paglen has started to examine the often surrealistic vision systems created for artificial intelligence (AI) and he is also collaborating with the Kronos Quartet on a performance that mixes their music with computer visualisations. And this autumn, he is literally launching a sculpture into space on a Falcon 9 rocket. We spoke with the artist from his studio in Berlin.

The Art Newspaper: For an artist like you, who works with themes of surveillance and hidden mechanisms and power structures, having a show in Washington, DC...

Trevor Paglen: In a federal institution.

...in a federal institution, what’s that like?

It’s a lot of paperwork.

Do you feel at all like you’re getting into the belly of the beast?

No, not at all. I expect there to be a lot of interest because so many people that work there are, to one extent or another, involved with some of the programmes that my work touches on. So I think it’ll be a really interesting audience because there will be a lot of people for whom the things my work is about are a part of their everyday lives.

I’ve had that happen pretty regularly, that there is a lot of interest from places like the intelligence community and the military because I’m looking at things that they’re involved with, in the same way that if you made an exhibition about plumbing, maybe plumbers would be interested in it, you know?

Trevor Paglen, Reaper Drone (Indian Springs, NV; Distance ~ 2 miles) (2010), C-print and photo: Trevor Paglen. Image courtesy of the artist; Metro Pictures, New York; Altman Siegel Gallery, San Francisco

What has it been like to revisit some of your earlier works?

For me, all the works are connected; there is a pretty clear thread through everything. It’s been fun to go back and work with pieces that I haven’t worked with for a long time. And to have a moment where you can look at everything together, which I’ve never done before really, and realise: “OK, I’ve been doing this for a while.”

It’s also about making connections, setting up juxtapositions between different bodies of work in ways that I would not have expected. In other words, the exhibition is not really chronological at all. There are different threads; one is thinking about landscape just as a form, another is about the sky as a kind of landscape.

The show has really early photographs that I was taking of different secret military bases using telescopes. Those are the kind of images that are falling apart. And I did a lot of work around looking at mass surveillance infrastructures, like NSA [National Security Agency] infrastructures and undersea cables, and looking at spy satellites and drones in the skies, and the skies through computer vision systems. There are certain kinds of tropes that I keep returning to, looking at them through different lenses, as it were, both literally and metaphorically. So it’s been fun to see all of those in relation to each other.

You’ve been working with AI lately, creating images based on how AI sees the world. How are those connected to the earlier photographic work?

One continuous theme has been thinking about the relationship between technologies of seeing and power, and how autonomous vision systems literally see the world. With the AI works, it’s almost like saying: “Let’s see what the world looks like from the vantage point of that spy satellite. Let’s see what the world looks like from the vantage point of the artificial intelligence that’s trying to understand all of the images or all the metadata that’s running through the undersea cables.” So the computer vision works are almost changing that point of view of the observer to the machine itself, whereas most of my works have been very consciously made from the vantage point of a person somewhere, looking at something.

A human person.

Yeah, exactly, a figure standing somewhere looking at something. And that’s predominantly the vantage point that I use. So the machine vision works are different, obviously, in that sense because some of them are from the vantage point of a person, but it’s always a non-human person that’s looking at the image, or an algorithm. And then, of course, you’re making an image that’s viewable by humans, but it’s asking you as a viewer to imagine that you are a machine.

Trevor Paglen, Shoshone Falls, Hough Transform; Haar (2017), silver gelatin print and photo: Trevor Paglen. Image courtesy of the artist; Metro Pictures, New York; Altman Siegel Gallery, San Francisco

It’s more sympathetic in a way because when you’re looking at a landscape or a system the person is removed, whereas with the AI works, you draw a connection with how our eyes interpret visual data for our brains.

Exactly. A big part of that work is trying to show you how autonomous vision systems see differently than humans and what kinds of abstractions a computer vision system is making out of an image. How are those abstractions different than the kind of abstractions we make out of images when we look at them?

You’re also working with the Kronos Quartet on a live performance called Sight Machine that you first did in San Francisco last year.

That was a one-off show, and we thought it was really successful and decided that we wanted to keep doing it. We basically retooled the entire show to turn it into a version that we can take on the road, that can travel. The whole basement of my studio is a giant setup for that right now.

In the performance, the Kronos Quartet is playing and there are about 20 cameras on stage with them, looking at them from various angles. And those cameras are all attached to a custom computer vision system that we built, that allows us to take all kinds of artificial intelligence algorithms and run the feeds from the cameras through that system. Then we project—behind the quartet, as they’re playing—a vision of the show as seen through the software. So you’re watching the performance, and at the same time you’re watching the performance as seen through different computer systems.

There is a huge range of these systems that you encounter over the course of the show. What does the Kronos Quartet look like as seen through a guided missile? What do they look like as seen through a self-driving car? What do they look like as seen through an artificial intelligence that’s trying to figure out their age, or their gender, or their emotional state?

Is the visualisation different every time because the performance is always different?

It’s different every time in the same way that every music performance is a little bit different, but it’s the same music that’s always played. And we have a score also for the computer vision that maps on to the music.

Do you see AI as a positive force in the future or do you think it’s dangerous?

I’ve been talking about this quite a lot recently. I think there are always political structures that are built into infrastructures. In the case of AI, the way that AI works in real life is that it can only function at massive scales. Like when we’re talking about AI, we’re really only talking about seven companies in the world that are able to do it at that scale—Amazon, Google, Facebook, Baidu, Microsoft, a couple more.

You need tremendous amounts of data in order to really make AI sing and make it do useful stuff. And because you need that volume of data, you can really only do it if you have an infrastructure that is basically a planetary-scale surveillance infrastructure. And then you have to have the ability to consolidate that information in centralised places, ie corporations or states. And so, given those two facts, the politics of the infrastructure is one of extreme centralisation of power in either nation-states, which China would be a good example of, or in corporations, like the Facebooks and Amazons.

Trevor Paglen, Vampire (Corpus: Monsters of Capitalism) Adversarially Evolved Hallucination (2017), dye sublimation print and photo: Trevor Paglen. Image courtesy of the artist; Metro Pictures, New York; Altman Siegel Gallery, San Francisco

On top of that is the extent to which artificial intelligence is built on a bedrock of human labour practices. In other words, if you’re sorting millions or tens of millions of images in order to train AI systems, people have to go and look at all of those images, and tag them, and flag them. And that’s done through things like [the crowdsourcing platform] Amazon Mechanical Turk, that’s usually piecemeal work that’s farmed out to developing countries and pays very little.

So there is this whole layer of labour undergirding the whole system, and again it’s a very precarious kind of labour that it’s built on.

AI’s very creation comes with these ethical issues.

Yeah, the ethical issues are embedded in the infrastructure. I think that there are some places where AI could be helpful, for example in energy consumption or efficiency; there are some applications in healthcare where it can probably do some good. But in the vast majority of cases, I think that the power of AI is going to be wielded and taken advantage of by very few people that are going to benefit from it.

Trevor Paglen, They Watch the Moon

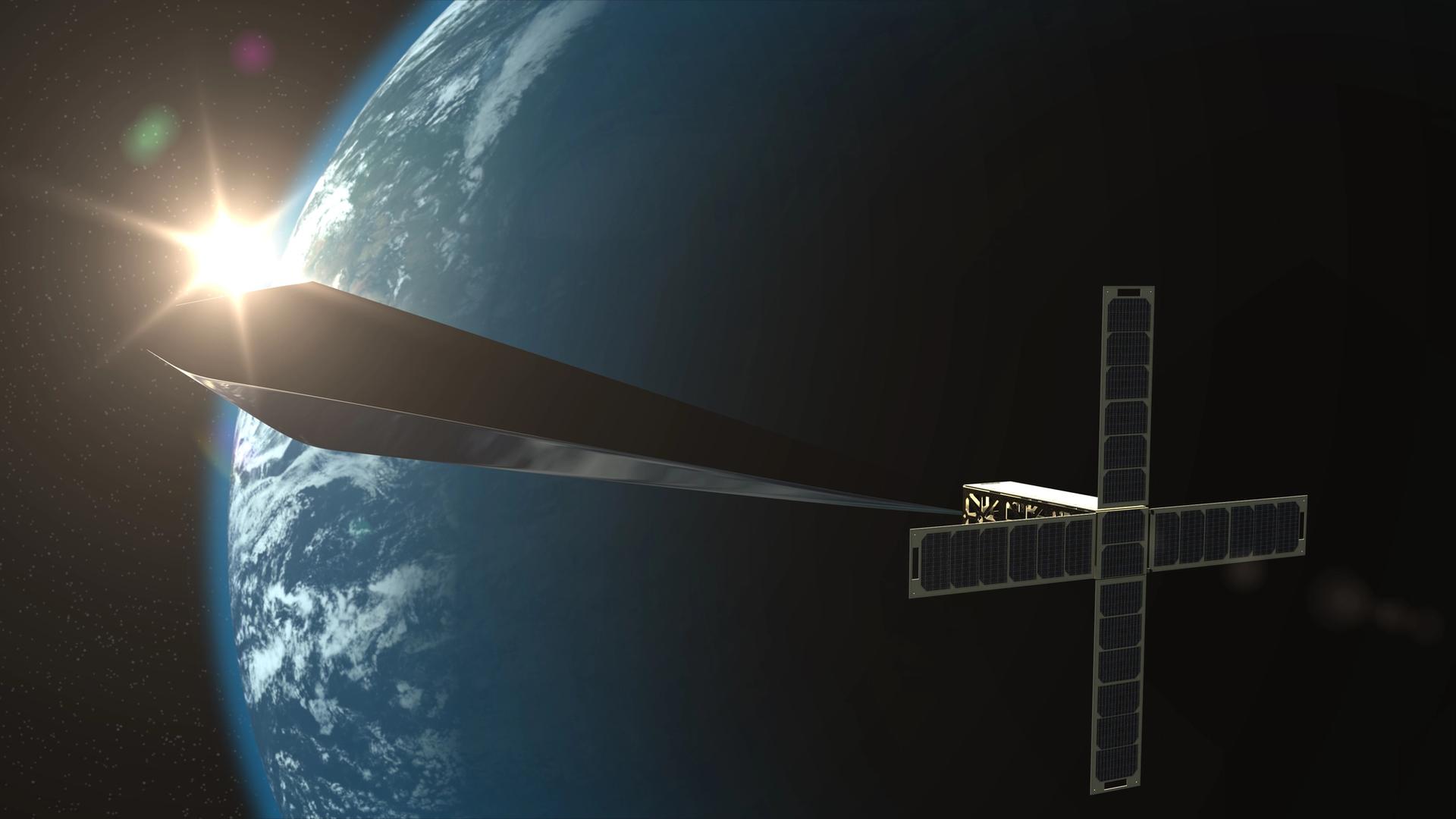

And how is Orbital Reflector, the sculpture you’re sending into space, going?

It’s going well. The week before last we did a lot of the testing on it. We put it on a machine that simulates the vibrations associated with the launch, to make sure that it would survive that, and also the environment in space, where you have these huge temperature fluctuations depending on whether you’re in the sun or in the shadows. You call it a shake-and-bake test: you shake it up like it’s on a rocket launch, and then you bake it; you cycle it through extreme temperature variations from about 200°F to -200°F. It seems good to go, so at the end of June we’ll be shipping it off to the launch provider, and it’s going to get put on the part of the rocket that will actually deploy it. Then that will get integrated into the rocket, I think, some time over the summer. It was supposed to launch in August, but now it’s been delayed, so our new launch window is October. Hopefully it won’t be the same date as Sight Machine.

Why the delay?

Don’t know. Just, you know, space stuff.

Like solar flares or something like that?

No, I think it’s something much more pedestrian, like somebody who probably paid a lot more money than we did to be on the rocket isn’t ready to go yet.

Design concept rendering for Trevor Paglen: Orbital Reflector, co-produced and presented by the Nevada Museum of Art. Courtesy of Trevor Paglen and Nevada Museum of Art

Once the work is actually in orbit you’ll be able to view it from...?

Anywhere, basically. Once the rocket goes up into space and it’s deployed, then my small satellite, which is about the size of a brick, inflates into a giant diamond-shaped mirror that’s about 100ft long and 5ft tall. And what that mirror will do is reflect sunlight down to earth, so you’ll be able to see it in the sky. It’ll be about as bright as one of the stars in the Big Dipper.

If you want to see it, you can go to our website, or you can download an app where you can say: “I’m in New York City”. And it’ll say: “OK, well, go out at 9.15 at night and look in this part of the sky.” And we’ll show you where to see it. The idea is to host viewing parties at different museums or observatories around the world, where we’ll just have a night looking at the stars together. And we’ll look at the sculpture, but we’ll also probably end up looking at some of the planets and some of the other weird denizens of the night sky.